Hi friends 👋 Welcome to February. Can you believe 2025 is already 9% complete? Well, not to brag, but my goals for this year and New Year's resolutions are already 100% abandoned.

I want to start with a big thank you to everyone who replied to the last newsletter with encouraging words about the new "Untitled Interviews Project" I'm working on. I really appreciate your support and I'm excited to share more about it soon. I even purchased a new domain name for it, so I guess I'm fully committed now.

Also, some of you reached out wanting to learn more about the note-taking and organization system I briefly mentioned last month. I'll be writing something about that too, so keep an eye on a future issue of the newsletter for the link.

This week we'll talk about estimating software projects, why we are so bad at it, and how we can more accurately predict the future without a crystal ball.

Let's dive in.

MAXI'S RAMBLINGS

Estimating Invisible Work

You know that feeling when your boss asks you for an estimate on a project, you tell them it’ll take two weeks, and then exactly two weeks later, you deliver the project fully up-to-spec, free of bugs, and with a complete test suite?

No? That really never happened to you?

Yeah, I know. It never happened to me either. When I say something is going to take two weeks, what I usually mean is that in two weeks I’ll be about 80% done… and all I’ll have left is the other 80%.

There’s a big chance this will resonate with your own experience because, well, we’re all equally bad at estimating projects—especially the large, messy ones that ask us to do things we’ve never done before. Of course, we do get our estimates right sometimes, but unless we somehow develop the ability to predict the future, estimating is not a skill we can consistently rely on.

It’s like I always say: there are only two kinds of people, those who are bad at estimating and those who are just lucky.

Ok, I never actually said that. But still, it kinda rings true, doesn’t it? It’s like there’s a layer of invisible work that always goes unaccounted for, and unless we luck out with our estimates, we’re pretty much guaranteed to miss the mark.

So today I want to spend a bit of time talking about what this invisible work looks like, and what, if anything, we can do to consider it in our estimates.

But first, let’s answer a more fundamental question—why are we so bad at estimating software projects?

Why are we so bad at estimating?

Invisible work and our inability to predict the future surely get in the way of coming up with accurate estimates, but there’s one more thing that explains why we’re all so bad at it—our overconfidence.

In a 1994 study, a group of researchers asked students to estimate how long it would take them to finish their senior theses—the average estimate was about 34 days. They also asked the students for a “best case” estimate if everything went as smoothly as possible and a “worst case” estimate if everything went as poorly as possible, to which the students estimated 27 and 48 days, respectively, on average.

What was the actual average completion time of the theses? 55 days, with the majority of students taking longer than their “worst case” estimate.

Scientists call this phenomenon the planning fallacy, and it’s just one of our many overconfidence biases. The planning fallacy causes us to be overly optimistic with our estimates even when there’s no reward for it, and it explains something we instinctively know: all of us, from psychology students to software engineers, are absolutely terrible at estimating complex projects.

I’m sure this isn’t shocking news to anybody, but there’s one aspect of this study (and the many others that have researched this fallacy over the years) that is worth calling out: It’s not just that we’re bad at estimating tasks and projects; it’s that we’re very good at underestimating them. Or in other words, we have a strong tendency to overestimate our ability to complete projects on time.

To compensate for this overconfidence, a common piece of advice is to add some “padding” to our estimates. Some might suggest doubling, tripling, or even quadrupling whatever time we initially think it will take. And, to be fair, these inflated estimates tend to be more accurate than our original ones (especially for larger projects), but they can suffer from an equally damaging and much sneakier problem: Parkinson’s Law.

According to Parkinson’s Law, “work expands so as to fill the time available for its completion.” If we give ourselves four weeks to complete something we could have easily done in three, chances are it will take us the full four weeks.

Sure, a super-inflated estimate would technically be “accurate” if we manage to deliver the project on time, but that doesn’t mean we used that time as efficiently as possible. Plus, there’s a limit to how much padding we can add to a project or task without raising some eyebrows. If our reply every time our boss asks us to update the color of a button is “You got it boss, it'll take me about 10 weeks”, our estimates might be accurate, but we’re going to have a hard time keeping that job for much longer.

This is why estimating projects is so hard. It’s like we have two opposing forces working against us—one encouraging us to give optimistic estimates and another one punishing us with inefficiency when we try to compensate for our optimism. No matter what estimate we give, it feels like it’s always going to be wrong.

But not all is lost. Our estimates might never be perfectly accurate 100% of the time, but there are things that can help us come up with more realistic ones. It all starts by taking a deeper look at that invisible work we talked about earlier.

Making the invisible visible

Invisible work isn’t really invisible—it’s just that we tend to ignore it or underestimate it in our estimates.

Adding some padding to our estimates works well because it recognizes that this invisible work exists, that unexpected things will eventually come up, and that tasks will always take longer than we initially thought. But how do we know how much padding we should add? How do we know when to double and when to triple our estimates? And how can we avoid the trap of adding too much padding and suffering the consequences of Parkinson's Law?

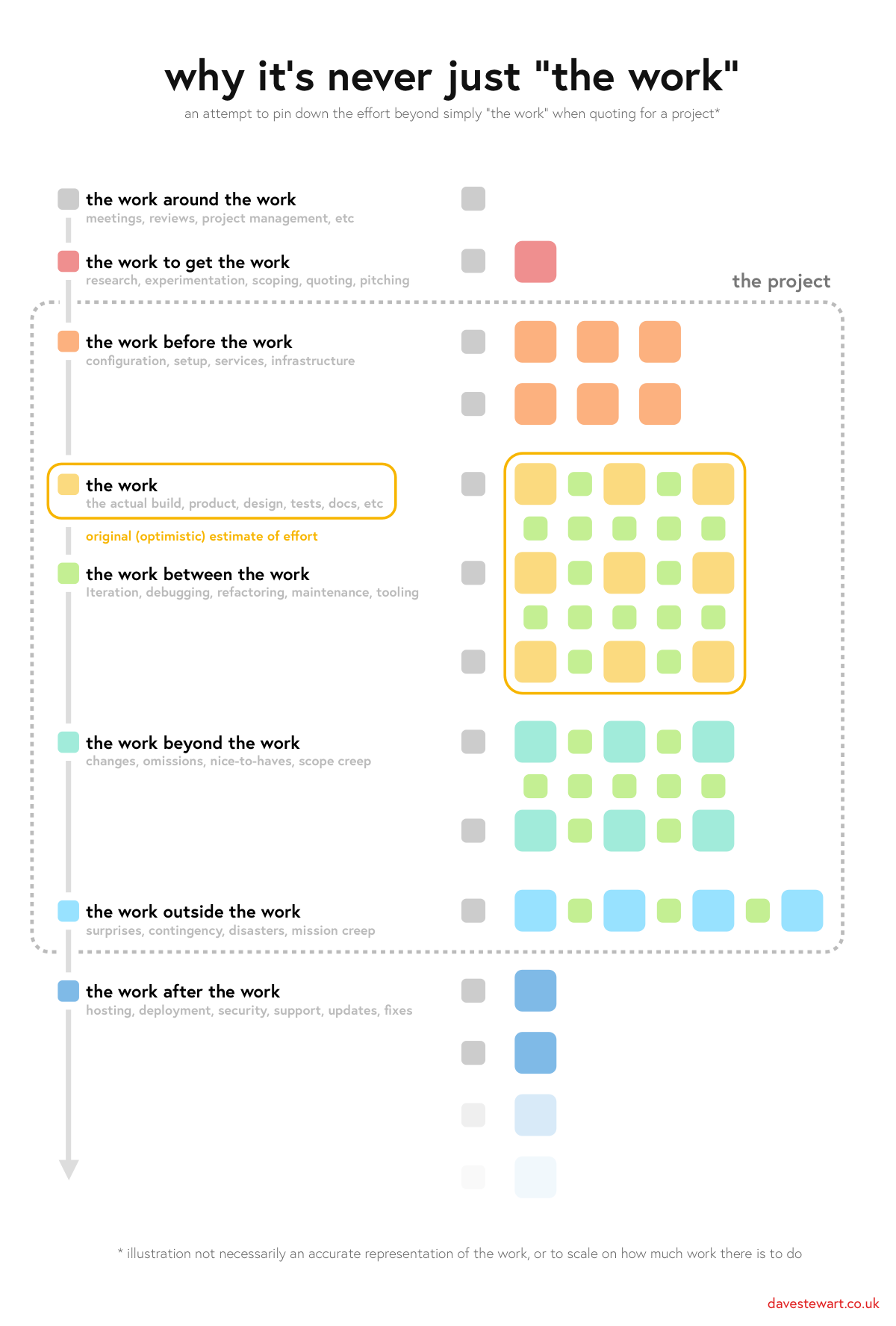

To answer these questions, we need to get more specific about what this invisible work looks like. And my favorite way to do that is by using Dave Stewart's framework, which explains why the work is never just “the work”.

In his great 2022 essay, Dave helps us make invisible work more tangible by breaking down the total amount of work that goes into a software project into eight categories:

- 1️⃣ The work around the work — meetings, reviews, project management

- 2️⃣ The work to get the work — research, experimentation, scoping, quoting, pitching

- 3️⃣ The work before the work — configuration, setup, services, infrastructure

- 4️⃣ The work — the actual build, design, and testing of the product

- 5️⃣ The work between the work — iteration, debugging, refactoring, maintenance, tooling

- 6️⃣ The work beyond the work — changes, omissions, nice-to-haves, scope creep

- 7️⃣ The work outside the work — surprises, contingency, disasters, mission creep

- 8️⃣ The work after the work — hosting, deployment, security, support, updates, fixes

Not every project has all of these different types of work, of course. But the larger and more complex the project, the more different types of “work” it’ll require.

Even small projects will likely involve at least three or four of these types of work. Say we think a project will take us about 40 hours of coding; giving an estimate of "a week" assumes that we’ll be able to code for 8 hours a day without any meetings, code reviews, interruptions, context-switching, or endless emails and Slack messages to reply to. Unless you work completely by yourself, that’s a very unlikely scenario.
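To put rough numbers on that, here's a tiny Python sketch of the arithmetic. The 40 hours and the 8-hour day come from the example above; the 5 focused hours per day is a figure I'm assuming purely for illustration, and `working_days` is just a made-up helper name.

```python
import math


def working_days(coding_hours: float, focus_hours_per_day: float) -> int:
    """Working days needed when only part of each day goes to coding."""
    if focus_hours_per_day <= 0:
        raise ValueError("focus_hours_per_day must be positive")
    # Round up: a partially used day still occupies a day on the calendar.
    return math.ceil(coding_hours / focus_hours_per_day)


# The "a week" estimate: 8 uninterrupted hours of coding every day.
print(working_days(40, 8))  # 5

# Same 40 hours, but assuming meetings, reviews, and Slack eat 3 hours a day.
print(working_days(40, 5))  # 8
```

With those assumed numbers, the same 40 hours of coding stretch from one work week to more than a week and a half, before any of the other types of work are even counted.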

What I like the most about Dave’s framework is that we can use his breakdown as a checklist anytime we sit down to estimate a project. We might have a clear idea of how long “the work” (coding, design, testing) will take, but what about the rest?

What research do we need to do before starting to tackle this project? What new infrastructure is required? Do we have any dependencies on other teams? What’s our plan for when bugs start to come in?

This exercise clearly isn’t an exact science, but asking ourselves these questions will shed some light on areas we might have previously ignored, resulting in an estimate we’ll feel much more comfortable with.
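If you want to make the checklist a bit more concrete, here's a minimal sketch of how it could look in Python. The category labels are shortened from Dave's list, the helper name `summarize` is my own invention, and the hours are made-up numbers for illustration, not data from a real project.

```python
# Shortened labels for the eight types of work in Dave Stewart's breakdown.
CATEGORIES = [
    "around",    # meetings, reviews, project management
    "to get",    # research, experimentation, scoping
    "before",    # configuration, setup, infrastructure
    "the work",  # the actual build, design, and testing
    "between",   # iteration, debugging, refactoring
    "beyond",    # changes, nice-to-haves, scope creep
    "outside",   # surprises, contingency, disasters
    "after",     # hosting, deployment, support, fixes
]


def summarize(estimates: dict[str, float]) -> tuple[float, list[str]]:
    """Return the total estimated hours and the categories not yet considered."""
    unknown = [name for name in estimates if name not in CATEGORIES]
    if unknown:
        raise ValueError(f"unknown categories: {unknown}")
    total = sum(estimates.values())
    missing = [name for name in CATEGORIES if name not in estimates]
    return total, missing


# Hypothetical estimate, in hours, for a small project.
estimates = {"the work": 40, "around": 6, "before": 4, "between": 10}
total, missing = summarize(estimates)
print(total)    # 60
print(missing)  # ['to get', 'beyond', 'outside', 'after']
```

The total matters less than the list of missing categories: each one is a question we haven't asked ourselves yet, even if the honest answer ends up being zero hours.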

So next time you have to do the very hard work of trying to predict the future and come up with a project estimate, I encourage you to give Dave’s checklist a try. It’ll help you come up with a more accurate estimate and maybe, just maybe, deliver your next project on time.

ARCHITECTURE SNACKS

Links Worth Checking Out

- Kent Beck & Beth Andres-Beck gave a keynote presentation at the Øredev Conference called We're Good At Writing Software. I don't think they would have named it that way if they saw some of the software I wrote, but I appreciate their optimism. In their talk, Kent and Beth explain their Desert and Forest analogy and share tons of great advice for getting out of the desert and reaching the bug-free, always productive forest.

- Jimmy Miller wrote about the benefits of Discovery Coding, the practice of writing some of the code first instead of starting with a complete design.

- You might be surprised to learn that the modern way of writing JavaScript servers involves using something that has been around for almost a decade—the Request/Response API.

- Brenley Dueck wrote an article about server functions, why they matter, and how they differ from server actions and server components. I appreciate his article, but I honestly don't get why we need an explanation because all of these names aren't confusing at all.

- Great overview by Sandro Maglione on all the different methods we have to manage state in React applications before reaching for third-party libraries.

- Laurie Voss, the VP of Developer Relations at LlamaIndex, wrote about everything he has learned so far about writing AI apps. Laurie's conclusion is that "LLMs are awesome and limited," which very much resonates with my own experience working with them.

- Kelly Sutton shares his experience a year after moving away from React and replacing it with the Ruby on Rails library StimulusJS.

- Kind of random but interesting if you're a Pokémon nerd like me: last year, a bunch of early prototypes of Pokémon trading cards started showing up in public auctions and sold for tons of money. Turns out that many of these prototypes weren't printed in the '90s as originally thought—but in 2024.

That’s all for today, friends! Thank you for making it all the way to the end. If you enjoyed the newsletter, it would mean the world to me if you’d share it with your friends and coworkers. (And if you didn't enjoy it, why not share it with an enemy?)

Did someone forward this to you? First of all, tell them how awesome they are, and then consider subscribing to the newsletter to get the next issue right in your inbox.

I read and reply to all of your comments. Feel free to reach out on Twitter, LinkedIn, or reply to this email directly with any feedback or questions.

Have a great week 👋

– Maxi